The Amplify UI FaceLivenessDetector is powered by Amazon Rekognition Face Liveness. The FaceLivenessDetector supports two types of verification challenges:

- The Face Movement and Light Challenge consists of a series of head movements and colored light tests.

- The Face Movement Challenge consists only of a series of head movements.

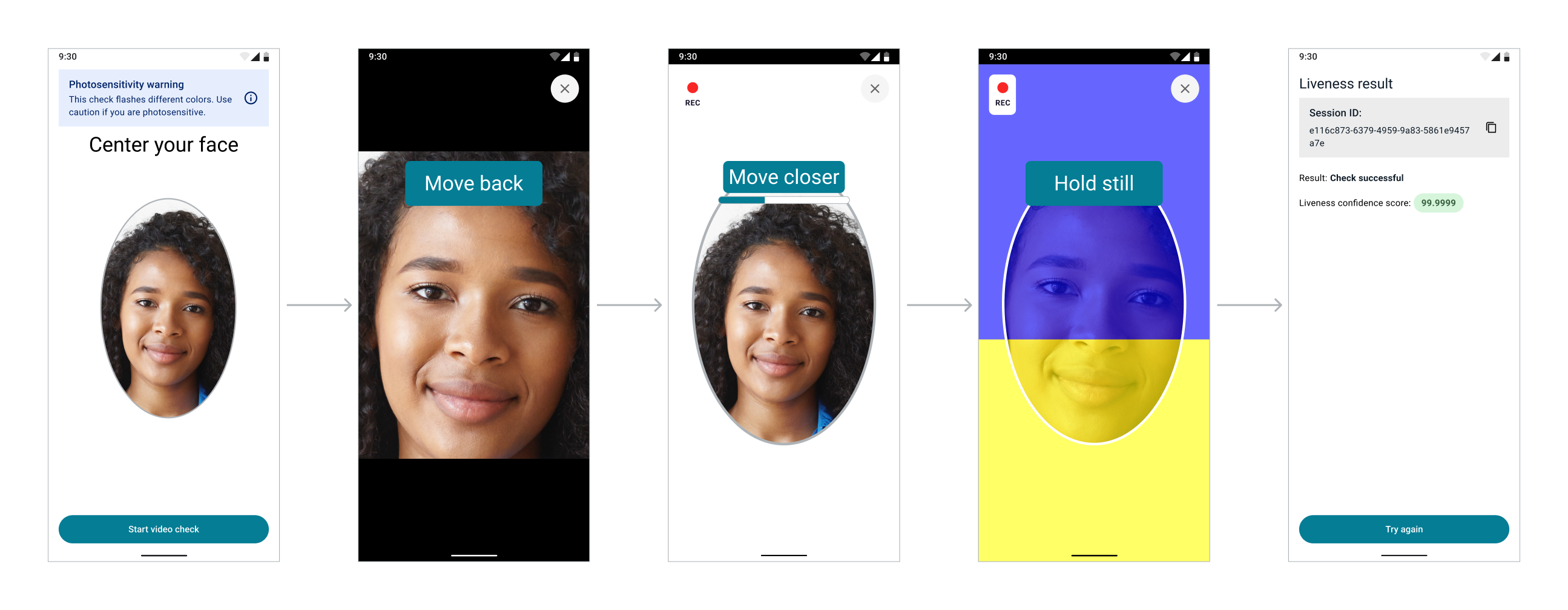

The following screenshots show an example of the Face Movement and Light Challenge in action.

To learn more about spoof attempts deterred by Face Liveness, please see this demonstration video on YouTube.

Quick start

Prerequisites:

- Install and configure the Amplify CLI by following this guide.

- An Android application targeting at least Android API level 24 (Android 7.0). For a full example of creating an Android project, please follow the project setup walkthrough. The Liveness UI component has passed preliminary testing on API level 23 (Android 6.0) with a workaround, but compatibility is not officially supported. If you decide to use Liveness on API level 23, you may encounter issues we can't address.

- A backend that is set up following the Amazon Rekognition Face Liveness developer guide.

Step 1. Configure Auth

There are four methods to set up the Amazon Cognito resources needed for Amplify Auth. The most common is the Amplify CLI create flow, which asks a series of questions and configures both a User Pool and an Identity Pool automatically. The second option is the Amplify CLI import flow, which adds an existing Cognito resource into Amplify. The third is to reuse or create a Cognito Identity Pool manually and add it to your application. Finally, the fourth is to pass a credentials provider that obtains AWS credentials under your control.

Note: Using Cognito does not mean that you have to migrate your users. By default, FaceLivenessDetector uses a Cognito Identity Pool for the sole purpose of signing requests to Rekognition.

Use the Amplify CLI to automatically configure and manage your Cognito Identity Pool and User Pool.

FaceLivenessDetector uses Amplify Auth by default to authorize users to perform the Face Liveness check. If you are using Amplify for the first time, follow the instructions for installing the Amplify CLI.

Set up a new Amplify project

    $ amplify init
    ? Enter a name for the project androidliveness
    ? Initialize the project with the above configuration? No
    ? Enter a name for the environment dev
    ? Choose your default editor: Android Studio
    ? Choose the type of app that you're building android
    Please tell us about your project
    ? Where is your Res directory: app/src/main/res

Add the auth category

    $ amplify add auth
    Do you want to use the default authentication and security configuration? Manual configuration
    Select the authentication/authorization services that you want to use: User Sign-Up, Sign-In, connected with AWS IAM controls (Enables per-user Storage features for images or other content, Analytics, and more)
    Provide a friendly name for your resource that will be used to label this category in the project: <default>
    Enter a name for your identity pool. <default>
    Allow unauthenticated logins? (Provides scoped down permissions that you can control via AWS IAM) Yes
    <Choose defaults for the rest of the questions>

Push to create the resources

    $ amplify push
    ✔ Successfully pulled backend environment dev from the cloud.

    Current Environment: dev

    | Category | Resource name    | Operation | Provider plugin   |
    | -------- | ---------------- | --------- | ----------------- |
    | Auth     | androidlive••••• | Create    | awscloudformation |

If you have an existing backend, run amplify pull to sync your amplifyconfiguration.json with your cloud backend.

You should now have an amplifyconfiguration.json file in your app/src/main/res/raw directory with your latest backend configuration.

Update IAM Role Permissions

Now that you have Amplify Auth set up, follow the steps below to create an inline policy that allows your app users to access Rekognition.

- Go to AWS IAM console → Roles
- Select the newly created `unauthRole` for your project (`amplify-<project_name>-<env_name>-<id>-unauthRole` if using the Amplify CLI). Note that `unauthRole` should be used if your users are not signing in; if you are using an authenticator with your application, you will need to use `authRole` instead.
- Choose Add Permissions, then select Create Inline Policy, then choose JSON and paste the following:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Action": "rekognition:StartFaceLivenessSession",
            "Resource": "*"
          }
        ]
      }

- Choose Review Policy
- Name the policy
- Choose Create Policy

To use Amplify UI FaceLivenessDetector, you must also set up a backend to create the Face Liveness session and retrieve the session results. Follow the Amazon Rekognition Face Liveness developer guide to set up your backend.

Step 2. Install dependencies

The FaceLivenessDetector component is built using Jetpack Compose. Enable Jetpack Compose by adding the following to the android section of your app's build.gradle file:

    compileOptions {
        // Support for Java 8 features
        coreLibraryDesugaringEnabled true
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }

    buildFeatures {
        compose true
    }

    composeOptions {
        // Compose Compiler version; it must be compatible with your Kotlin version
        kotlinCompilerExtensionVersion '1.5.3'
    }

Add the following dependencies to your app's build.gradle file and click "Sync Now" when prompted:

    dependencies {
        // FaceLivenessDetector dependency
        implementation 'com.amplifyframework.ui:liveness:1.5.0'

        // Amplify Auth dependency (unnecessary if using your own credentials provider)
        implementation 'com.amplifyframework:aws-auth-cognito:2.29.0'

        // Material3 dependency for theming FaceLivenessDetector
        implementation 'androidx.compose.material3:material3:1.1.2'

        // Support for Java 8 features
        coreLibraryDesugaring 'com.android.tools:desugar_jdk_libs:1.1.5'
    }

Step 3. Initialize Amplify Auth

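In the onCreate of your Application class, add the Auth plugin before calling Amplify.configure. Both calls can throw AmplifyException, so wrap them in a try/catch.

Kotlin:

    try {
        // Add these lines to include the Auth plugin.
        Amplify.addPlugin(AWSCognitoAuthPlugin())
        Amplify.configure(applicationContext)
    } catch (error: AmplifyException) {
        Log.e("MyApp", "Could not initialize Amplify", error)
    }

Java:

    try {
        // Add these lines to include the Auth plugin.
        Amplify.addPlugin(new AWSCognitoAuthPlugin());
        Amplify.configure(getApplicationContext());
    } catch (AmplifyException error) {
        Log.e("MyApp", "Could not initialize Amplify", error);
    }

RxJava: follow the Amplify Android documentation to start using Amplify for RxJava (RxAmplify), then add:

    try {
        // Add these lines to include the Auth plugin.
        RxAmplify.addPlugin(new AWSCognitoAuthPlugin());
        RxAmplify.configure(getApplicationContext());
    } catch (AmplifyException error) {
        Log.e("MyApp", "Could not initialize Amplify", error);
    }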

Step 4. Request camera permissions

FaceLivenessDetector requires access to the camera on the user's device in order to perform the Face Liveness check. Before displaying FaceLivenessDetector, prompt the user to grant camera permission. Please follow these guides for examples of requesting camera permission using either Android or Jetpack Compose.
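The permission check that has to happen before FaceLivenessDetector is displayed can be sketched as a small pure function. This is a hypothetical helper, not part of Amplify: CameraPermissionState stands in for the two values an Android app would read from ContextCompat.checkSelfPermission and ActivityCompat.shouldShowRequestPermissionRationale, so the decision logic can be shown without Android dependencies.

```kotlin
// Hypothetical stand-in for the values an Android app would read from
// ContextCompat.checkSelfPermission and
// ActivityCompat.shouldShowRequestPermissionRationale for the CAMERA permission.
data class CameraPermissionState(
    val granted: Boolean,
    val shouldShowRationale: Boolean,
)

// What the screen should do next before FaceLivenessDetector is displayed.
enum class NextStep { SHOW_LIVENESS, SHOW_RATIONALE, REQUEST_PERMISSION }

fun nextStep(state: CameraPermissionState): NextStep = when {
    // Permission already granted: it is safe to display FaceLivenessDetector.
    state.granted -> NextStep.SHOW_LIVENESS
    // The user declined before: explain why the camera is needed first.
    state.shouldShowRationale -> NextStep.SHOW_RATIONALE
    // Otherwise, launch the system permission request.
    else -> NextStep.REQUEST_PERMISSION
}
```

Only when the result is SHOW_LIVENESS would the app go on to call setContent with FaceLivenessDetector.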

Step 5. Add FaceLivenessDetector

In the onCreate of your app's MainActivity, add the following code to display FaceLivenessDetector, replacing <session ID> with the session ID returned from creating the Face Liveness session and replacing <region> with the region you would like to use for the Face Liveness check. The list of supported regions is in the Amazon Rekognition Face Liveness developer guide. The code below wraps FaceLivenessDetector in a MaterialTheme that uses the Face Liveness color scheme. More information about theming is in the Face Liveness Customization page.

    setContent {
        MaterialTheme(
            colorScheme = LivenessColorScheme.default()
        ) {
            FaceLivenessDetector(
                sessionId = "<session ID>",
                region = "<region>",
                onComplete = {
                    Log.i("MyApp", "Face Liveness flow is complete")
                    // The Face Liveness flow is complete and the session
                    // results are ready. Use your backend to retrieve the
                    // results for the Face Liveness session.
                },
                onError = { error ->
                    Log.e("MyApp", "Error during Face Liveness flow", error)
                    // An error occurred during the Face Liveness flow, such as
                    // time out or missing the required permissions.
                }
            )
        }
    }

FaceLivenessDetector must be created in Kotlin but can still be used in a Java-based app. First, create a new Kotlin file called MyView and add the following code to create FaceLivenessDetector, replacing <session ID> with the session ID returned from creating the Face Liveness session and replacing <region> with the region you would like to use for the Face Liveness check. The list of supported regions is in the Amazon Rekognition Face Liveness developer guide. The code below wraps FaceLivenessDetector in a MaterialTheme that uses the Face Liveness color scheme. More information about theming is in the Face Liveness Customization page.

    import android.util.Log
    import androidx.activity.ComponentActivity
    import androidx.activity.compose.setContent
    import androidx.compose.material3.MaterialTheme
    import com.amplifyframework.ui.liveness.ui.FaceLivenessDetector
    import com.amplifyframework.ui.liveness.ui.LivenessColorScheme

    object MyView {
        // @JvmStatic lets Java call MyView.setViewContent(...) directly
        // instead of MyView.INSTANCE.setViewContent(...).
        @JvmStatic
        fun setViewContent(activity: ComponentActivity) {
            activity.setContent {
                MaterialTheme(
                    colorScheme = LivenessColorScheme.default()
                ) {
                    FaceLivenessDetector(
                        sessionId = "<session ID>",
                        region = "<region>",
                        onComplete = {
                            Log.i("MyApp", "Face Liveness flow is complete")
                            // The Face Liveness flow is complete and the
                            // session results are ready. Use your backend to
                            // retrieve the results for the Face Liveness session.
                        },
                        onError = { error ->
                            Log.e("MyApp", "Error during Face Liveness flow", error)
                            // An error occurred during the Face Liveness flow, such
                            // as time out or missing the required permissions.
                        }
                    )
                }
            }
        }
    }

In the onCreate of your app's MainActivity, add the following code to display FaceLivenessDetector:

    MyView.setViewContent(this);

If you previously had unmanaged resources that you want to manage with Amplify, you can use the CLI to import your Cognito resources.

FaceLivenessDetector uses Amplify Auth by default to authorize users to perform the Face Liveness check. Follow the instructions for importing existing resources.

After importing, complete Update IAM Role Permissions and Steps 2 through 5 as described for the Amplify CLI option above.

Use this option if you already have Cognito identity or user pools that you do not want to import into Amplify, or if you want to manage Cognito resources yourself or with a third-party resource management tool.

If you already have Cognito set up or do not want to use the Amplify CLI to generate Cognito resources, you can follow the documentation in the existing resources tab.

If you are manually setting up an identity pool in the Cognito console, you can follow this guide. When setting up the identity pool, ensure that access to unauthenticated identities is enabled.

When initially configuring Amplify (assuming you are using no part of Amplify other than FaceLivenessDetector), you can manually create an amplifyconfiguration.json file in app/src/main/res/raw of this form:

    {
      "auth": {
        "plugins": {
          "awsCognitoAuthPlugin": {
            "CredentialsProvider": {
              "CognitoIdentity": {
                "Default": {
                  "PoolId": "us-east-1:-------------",
                  "Region": "us-east-1"
                }
              }
            }
          }
        }
      }
    }
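Because an identity pool ID starts with its Region (as in the us-east-1 example above), a PoolId and Region pair that do not match is an easy mistake when editing this file by hand. The hypothetical helper below, which is not part of Amplify, sketches a sanity check you might run on the two values, for example in a unit test.

```kotlin
// Hypothetical helper, not part of Amplify: checks that a Cognito identity
// pool ID of the form "<region>:<id>" starts with the same Region as the
// "Region" value next to it in amplifyconfiguration.json.
fun poolIdMatchesRegion(poolId: String, region: String): Boolean {
    val separator = poolId.indexOf(':')
    // A missing or empty "<region>:" prefix means the ID is malformed.
    if (separator <= 0) return false
    return poolId.substring(0, separator) == region
}
```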

Then complete Update IAM Role Permissions, attaching the inline policy to your identity pool's unauthenticated role (or its authenticated role if your users sign in), and Steps 2 through 5 as described for the Amplify CLI option above.

Use this option if you want more control over the process of obtaining AWS credentials.

By default, FaceLivenessDetector uses Amplify Auth to authorize users to perform the Face Liveness check. You can use your own credentials provider to retrieve credentials from Amazon Cognito or assume a role with AWS STS, for example:

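    import com.amplifyframework.auth.AWSCredentials
    import com.amplifyframework.auth.AWSCredentialsProvider
    import com.amplifyframework.auth.AuthException
    import com.amplifyframework.core.Consumer

    class MyCredentialsProvider : AWSCredentialsProvider<AWSCredentials> {
        override fun fetchAWSCredentials(
            onSuccess: Consumer<AWSCredentials>,
            onError: Consumer<AuthException>
        ) {
            // Fetch the credentials. Note that these credentials must be
            // of type AWSTemporaryCredentials. Then call
            // `onSuccess.accept(creds)`, or `onError.accept(exception)` on failure.
        }
    }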

    FaceLivenessDetector(
        sessionId = "<session ID>",
        region = "<region>",
        credentialsProvider = MyCredentialsProvider(),
        onComplete = {
            Log.i("MyApp", "Liveness flow is complete")
        },
        onError = { error ->
            Log.e("MyApp", "Error during Liveness flow", error)
        }
    )

Note: The provided Credentials Provider's fetchAWSCredentials function is called once at the start of the liveness flow, with no token refresh.
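Since there is no refresh, a custom provider should avoid handing over credentials that are about to expire. The sketch below is hypothetical: FetchedCredentials stands in for the expiration time of the AWSTemporaryCredentials your provider builds, and the five-minute minimum is an assumed safety margin, not a documented session length.

```kotlin
import java.time.Duration
import java.time.Instant

// Hypothetical stand-in for the expiration time of the temporary
// credentials your provider is about to pass to onSuccess.
data class FetchedCredentials(val expiration: Instant)

// fetchAWSCredentials runs once per liveness flow with no refresh, so only
// accept credentials that remain valid for at least minRemaining.
// The five-minute default is an assumed safety margin.
fun isFreshEnough(
    creds: FetchedCredentials,
    now: Instant = Instant.now(),
    minRemaining: Duration = Duration.ofMinutes(5),
): Boolean = Duration.between(now, creds.expiration) >= minRemaining
```

If the check fails, the provider would fetch new credentials before calling onSuccess rather than passing the stale ones along.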

Update IAM Role Permissions

Follow the steps below to create an inline policy that allows the credentials returned by your credentials provider to access Rekognition.

- Go to AWS IAM console → Roles
- Select the role whose temporary credentials your credentials provider returns (for example, the unauthenticated or authenticated role of your Cognito identity pool, or the role you assume with AWS STS).
- Choose Add Permissions, then select Create Inline Policy, then choose JSON and paste the following:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Action": "rekognition:StartFaceLivenessSession",
            "Resource": "*"
          }
        ]
      }

- Choose Review Policy
- Name the policy
- Choose Create Policy

To use Amplify UI FaceLivenessDetector, you must also set up a backend to create the Face Liveness session and retrieve the session results. Follow the Amazon Rekognition Face Liveness developer guide to set up your backend.

Step 2. Install dependencies

The FaceLivenessDetector component is built using Jetpack Compose. Enable Jetpack Compose by adding the following to the android section of your app's build.gradle file:

    compileOptions {
        // Support for Java 8 features
        coreLibraryDesugaringEnabled true
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }

    buildFeatures {
        compose true
    }

    composeOptions {
        // Compose Compiler version; it must be compatible with your Kotlin version
        kotlinCompilerExtensionVersion '1.5.3'
    }

Add the following dependencies to your app's build.gradle file and click "Sync Now" when prompted:

    dependencies {
        // FaceLivenessDetector dependency
        implementation 'com.amplifyframework.ui:liveness:1.5.0'

        // Material3 dependency for theming FaceLivenessDetector
        implementation 'androidx.compose.material3:material3:1.1.2'

        // Support for Java 8 features
        coreLibraryDesugaring 'com.android.tools:desugar_jdk_libs:1.1.5'
    }

Step 3. Request camera permissions

FaceLivenessDetector requires access to the camera on the user's device in order to perform the Face Liveness check. Before displaying FaceLivenessDetector, prompt the user to grant camera permission. Please follow these guides for examples of requesting camera permission using either Android or Jetpack Compose.

Step 4. Add FaceLivenessDetector

In the onCreate of your app's MainActivity, add the following code to display FaceLivenessDetector, replacing <session ID> with the session ID returned from creating the Face Liveness session, replacing <region> with the region you would like to use for the Face Liveness check, and passing your credentials provider as credentialsProvider. The list of supported regions is in the Amazon Rekognition Face Liveness developer guide. The code below wraps FaceLivenessDetector in a MaterialTheme that uses the Face Liveness color scheme. More information about theming is in the Face Liveness Customization page.

    setContent {
        MaterialTheme(
            colorScheme = LivenessColorScheme.default()
        ) {
            FaceLivenessDetector(
                sessionId = "<session ID>",
                region = "<region>",
                // Your own provider, since Amplify Auth is not configured in this option.
                credentialsProvider = MyCredentialsProvider(),
                onComplete = {
                    Log.i("MyApp", "Face Liveness flow is complete")
                    // The Face Liveness flow is complete and the session
                    // results are ready. Use your backend to retrieve the
                    // results for the Face Liveness session.
                },
                onError = { error ->
                    Log.e("MyApp", "Error during Face Liveness flow", error)
                    // An error occurred during the Face Liveness flow, such as
                    // time out or missing the required permissions.
                }
            )
        }
    }

FaceLivenessDetector must be created in Kotlin but can still be used in a Java-based app. First, create a new Kotlin file called MyView and add the following code to create FaceLivenessDetector, replacing <session ID> with the session ID returned from creating the Face Liveness session, replacing <region> with the region you would like to use for the Face Liveness check, and passing your credentials provider as credentialsProvider. The list of supported regions is in the Amazon Rekognition Face Liveness developer guide. The code below wraps FaceLivenessDetector in a MaterialTheme that uses the Face Liveness color scheme. More information about theming is in the Face Liveness Customization page.

    import android.util.Log
    import androidx.activity.ComponentActivity
    import androidx.activity.compose.setContent
    import androidx.compose.material3.MaterialTheme
    import com.amplifyframework.ui.liveness.ui.FaceLivenessDetector
    import com.amplifyframework.ui.liveness.ui.LivenessColorScheme

    object MyView {
        // @JvmStatic lets Java call MyView.setViewContent(...) directly
        // instead of MyView.INSTANCE.setViewContent(...).
        @JvmStatic
        fun setViewContent(activity: ComponentActivity) {
            activity.setContent {
                MaterialTheme(
                    colorScheme = LivenessColorScheme.default()
                ) {
                    FaceLivenessDetector(
                        sessionId = "<session ID>",
                        region = "<region>",
                        // Your own provider, since Amplify Auth is not configured in this option.
                        credentialsProvider = MyCredentialsProvider(),
                        onComplete = {
                            Log.i("MyApp", "Face Liveness flow is complete")
                            // The Face Liveness flow is complete and the
                            // session results are ready. Use your backend to
                            // retrieve the results for the Face Liveness session.
                        },
                        onError = { error ->
                            Log.e("MyApp", "Error during Face Liveness flow", error)
                            // An error occurred during the Face Liveness flow, such
                            // as time out or missing the required permissions.
                        }
                    )
                }
            }
        }
    }

In the onCreate of your app's MainActivity, add the following code to display FaceLivenessDetector:

    MyView.setViewContent(this);

See the Rekognition documentation for best practices when using FaceLivenessDetector.
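Each onComplete callback above leaves it to your backend to retrieve the session results. As a minimal sketch, assuming your backend calls the Rekognition GetFaceLivenessSessionResults API and reads its Status and Confidence fields, the final decision might look like the following. The types are hypothetical mirrors of those fields, and the threshold of 80 is an illustrative assumption that you should choose for your own use case.

```kotlin
// Hypothetical mirror of the session status values returned by the
// Rekognition GetFaceLivenessSessionResults API.
enum class SessionStatus { CREATED, IN_PROGRESS, SUCCEEDED, FAILED, EXPIRED }

// Hypothetical mirror of the two response fields this sketch uses;
// confidence is reported on a 0 to 100 scale.
data class LivenessResult(val status: SessionStatus, val confidence: Float)

// Treat the user as live only if the session succeeded and the confidence
// meets your threshold. 80f is an illustrative assumption, not a recommendation.
fun isLive(result: LivenessResult, threshold: Float = 80f): Boolean =
    result.status == SessionStatus.SUCCEEDED && result.confidence >= threshold
```

Keeping this decision on the backend, rather than in the app, means the threshold cannot be changed by tampering with the client.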

Full API Reference

FaceLivenessDetector Parameters

Below is the full list of parameters that can be used with the FaceLivenessDetector component. You can also reference the source code here.

| Name | Description | Type |
|---|---|---|
| sessionId | The session ID returned by the CreateFaceLivenessSession API. | |
| region | The AWS Region to stream the video to. This should match the Region in which you called the CreateFaceLivenessSession API. | |
| credentialsProvider | An optional parameter that provides AWS credentials. | |
| disableStartView | An optional parameter that disables the initial view with instructions; default = false. | |
| challengeOptions | An optional parameter that allows you to configure options for a given liveness challenge. | |
| onComplete | Callback that signals when the liveness session has completed. | |
| onError | Callback that signals when the liveness session has returned an error. | |
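One way to keep the region parameter consistent with the CreateFaceLivenessSession call is to have your backend return both values together. The sketch below assumes such a backend response; LivenessSessionInfo is a hypothetical type, not part of the library.

```kotlin
// Hypothetical response from your own backend after it calls
// CreateFaceLivenessSession: the session ID plus the Region it used.
data class LivenessSessionInfo(val sessionId: String, val region: String) {
    init {
        // Fail fast instead of launching FaceLivenessDetector with empty values.
        require(sessionId.isNotBlank()) { "sessionId must not be blank" }
        require(region.isNotBlank()) { "region must not be blank" }
    }
}
```

The app would then pass info.sessionId and info.region to FaceLivenessDetector, so both values always come from the same backend call.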

Error States

Below is the full list of error states that can be returned to the onError handler of FaceLivenessDetector. You can also reference the source code here.

| Name | Description | Type |
|---|---|---|
| FaceLivenessDetectionException | An unknown error occurred; retry the face liveness check. | |
| FaceLivenessDetectionException.SessionNotFoundException | Session not found. | |
| FaceLivenessDetectionException.AccessDeniedException | Not authorized to perform a face liveness check. | |
| FaceLivenessDetectionException.CameraPermissionDeniedException | The camera permission has not been granted. | |
| FaceLivenessDetectionException.SessionTimedOutException | The session timed out and did not receive a response from the server within the time limit. | |
| FaceLivenessDetectionException.UnsupportedChallengeTypeException | The backend sent a liveness challenge that is not supported by the library. | |
| FaceLivenessDetectionException.UserCancelledException | The user cancelled the face liveness check. | |
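The error states above can be mapped to a follow-up action in your onError callback. To keep this sketch compilable without the library, LivenessError is a hypothetical enum mirroring the table; in an app you would branch on the real FaceLivenessDetectionException subclasses with `is` checks. The suggested actions are assumptions based on each description.

```kotlin
// Hypothetical mirror of the error states in the table above, used so this
// sketch compiles without the Amplify UI library.
enum class LivenessError {
    UNKNOWN,
    SESSION_NOT_FOUND,
    ACCESS_DENIED,
    CAMERA_PERMISSION_DENIED,
    SESSION_TIMED_OUT,
    UNSUPPORTED_CHALLENGE_TYPE,
    USER_CANCELLED,
}

// Suggested follow-up for each error; the wording is an assumption
// derived from the descriptions in the table.
fun followUp(error: LivenessError): String = when (error) {
    LivenessError.UNKNOWN -> "Retry the face liveness check."
    LivenessError.SESSION_NOT_FOUND -> "Verify the session ID and Region, or create a new session."
    LivenessError.ACCESS_DENIED -> "Check that the IAM role allows rekognition:StartFaceLivenessSession."
    LivenessError.CAMERA_PERMISSION_DENIED -> "Ask the user to grant camera permission."
    LivenessError.SESSION_TIMED_OUT -> "Offer the user another attempt."
    LivenessError.UNSUPPORTED_CHALLENGE_TYPE -> "Update the liveness library to a version that supports the challenge."
    LivenessError.USER_CANCELLED -> "Return the user to the previous screen."
}
```

With the real classes, put the base FaceLivenessDetectionException branch last in the `when`, because every subclass is also an instance of it and would otherwise be caught by that branch first.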